Family source list WIP part 1

In the interest of working more with the garage door up, I’m going to write this as I work on this project, starting at the beginning. (Rather than just posting about it when it’s done.)

The idea is to have some kind of list (chart isn’t the right word) where I can put what I know about a family (genealogy-wise) and how I know it (which sources provide evidence). I want the output format to be PDF so it’s easily printable/archivable.

At this point I have a rough picture in my head of what it might look like:

- Headings (one for each person, probably some more general groups too), and then under each heading a list of facts (birth date, place, marriage, kids, etc.) with the sources for each.

- Some sources are used more than once, so having some way to simplify that (a table of sources, maybe) would be good.

- Also, some way to mark a fact as less sure (more of a supposition). This makes me think that “fact” is probably the wrong word to use here. “Assertion” makes sense but also feels a bit much. “Point,” maybe? This is almost certainly only going to be used internally (I’m not planning to put the word on the list itself), but I try to get the nomenclature right for my own sake. A person has a list of points? “Attribute” feels more correct but also too technical.

- As far as the supposition aspect goes, I don’t know yet if I want a binary (unproven, proven) or a gradient (0% sure, 50% sure, 100% sure, for example). Probably going to start with a binary to keep things simpler.
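To make that rough picture a bit more concrete, here is a minimal sketch of how the data behind it might be modeled. It is only one possible shape, not a decision. The names `Point`, `Heading`, and `suppositions` are hypothetical, the sample text in the comments is made up, and defaulting a point to unproven is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Point:
    """One thing known (or supposed) about a person."""
    text: str                                         # e.g. "Born about 1823" (made-up example)
    sources: list[str] = field(default_factory=list)  # plain descriptions, e.g. "1850 census"
    proven: bool = False                              # binary for now; assumed unproven until marked


@dataclass
class Heading:
    """A person, or a more general group, with its list of points."""
    title: str
    points: list[Point] = field(default_factory=list)


def suppositions(heading: Heading) -> list[Point]:
    """Return the points under a heading that are still unproven."""
    return [p for p in heading.points if not p.proven]
```

If the binary ever turns into a gradient, `proven` could become a number between 0 and 1 without changing the rest of the structure.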

While it’s very tempting right now to get the code environment set up and start mocking things up directly in HTML (since that’s what I’m using to go to PDF), I’m going to make myself write some mocks by hand first instead. And then maybe do a quick iteration in Google Docs.

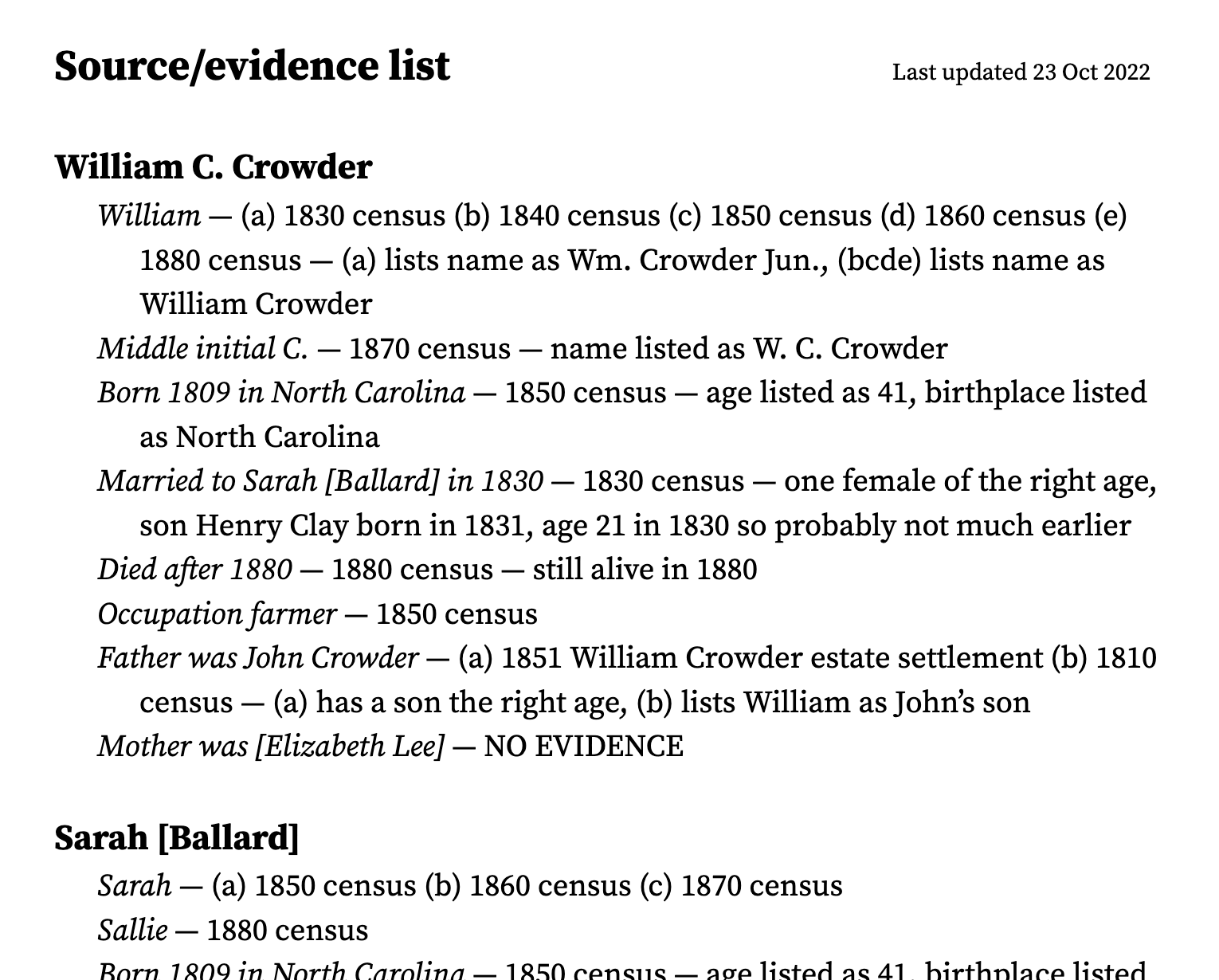

Okay, I ended up going straight to Google Docs, which worked out well since I went through seven or eight iterations and I’m still not there yet. Current status (keeping in mind that this is more about sussing out how to lay out the information and is far from a finished design):

Notes:

- The format right now for each line is “fact/supposition/point — sources — reasoning”.

- If there’s more than one source, we label them a, b, c, and so on, so we can easily and succinctly distinguish between them in the reasoning section.

- The reasoning section is optional when the point follows straightforwardly from the source.

- I initially had a table of sources included at the end but it felt like overkill. At the point/line level, it seems better to have the actual source description (“1850 census”) rather than some cryptic reference to the table like “[12]”. Then the user doesn’t have to keep going back and forth to figure out what the sources are.

- I’m thinking of grouping things better when I do the final design, but I’m not going to worry about that for now.

- I haven’t yet gotten to the suppositions/assertions.
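As a rough sketch of the line format described in these notes, the function below builds one line from a point, its sources, and optional reasoning. The function name `format_line`, the "(a)" label style, and the semicolon between sources are all assumptions for illustration, not the actual layout from the mock.

```python
from __future__ import annotations

import string

SEP = " \u2014 "  # the dash separator used between the parts of a line


def format_line(point: str, sources: list[str], reasoning: str = "") -> str:
    """Render one line as: point, then sources, then optional reasoning."""
    if len(sources) > 1:
        # Label sources a, b, c, ... so the reasoning can refer to them.
        # zip() stops at 26 letters, which is assumed to be plenty here.
        labeled = [f"({letter}) {src}"
                   for letter, src in zip(string.ascii_lowercase, sources)]
    else:
        # A single source needs no label.
        labeled = list(sources)

    parts = [point]
    if labeled:
        parts.append("; ".join(labeled))
    if reasoning:
        # Reasoning is optional and only added when given.
        parts.append(reasoning)
    return SEP.join(parts)
```

For example, `format_line("Born about 1823", ["1850 census", "1860 census"], "a and b agree on age")` puts both sources on the line with their letters, and the reasoning can then say "a" and "b" instead of repeating the descriptions. The sample values here are made up.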

Also, I’ve decided to call this a family source list. More to come!